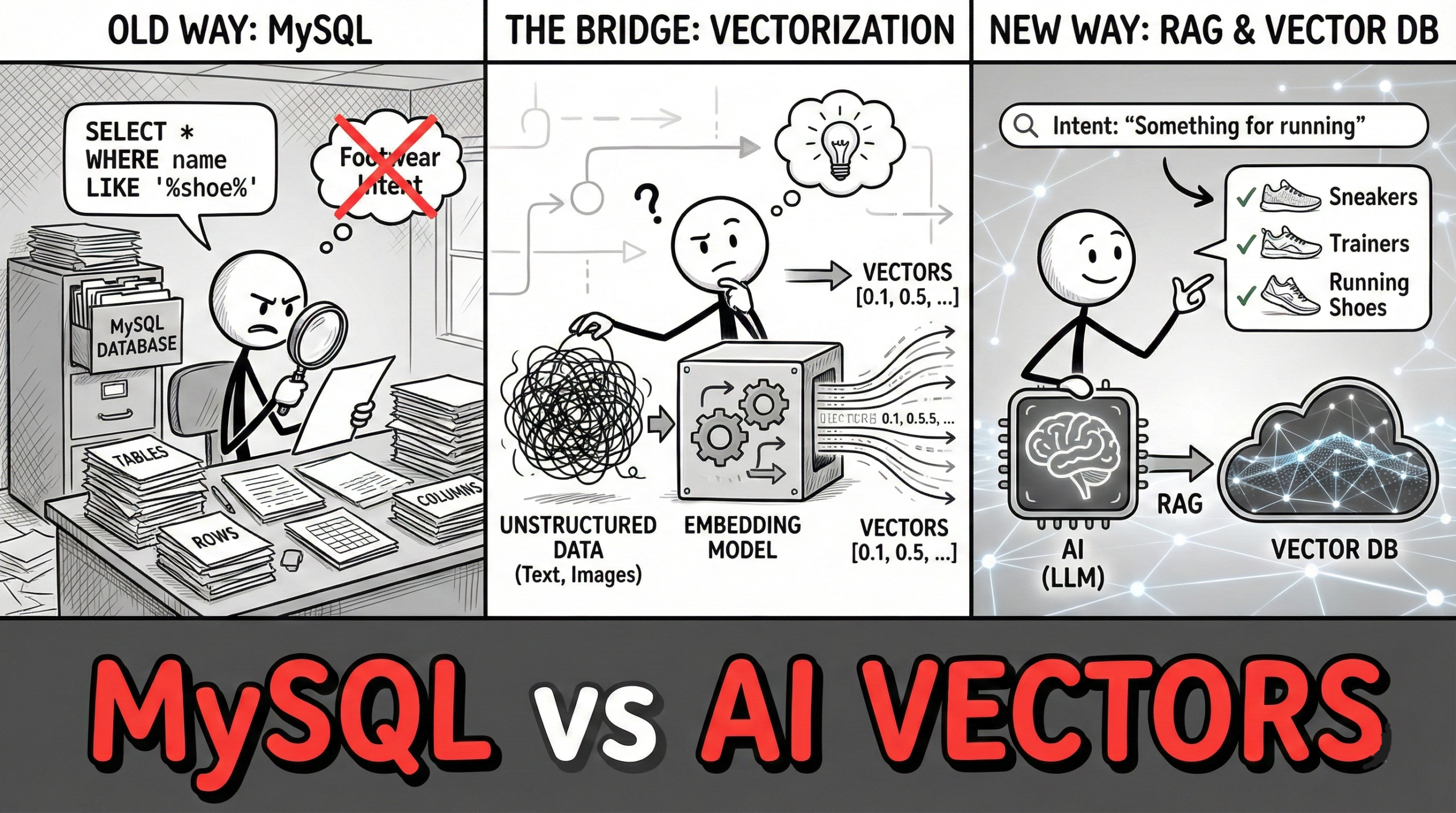

In the world of web development, MySQL is the reliable workhorse. It holds our user tables, our inventory lists, and our transaction logs with rock-solid consistency. But as we enter the era of Generative AI, we are facing a new problem: Relational databases are designed to find exactly what you typed. AI needs to find exactly what you meant.

If you are trying to build an AI architecture—like a chatbot or a recommendation engine—on top of a standard MySQL backend, you are likely trying to fit a square peg into a round hole. Here is why you should leave MySQL for the accounting department and switch to Vector Databases and RAG for your AI.

The Problem: The “Exact Match” Trap

To understand why MySQL struggles with AI, you have to understand how it searches.

When you run a query like SELECT * FROM products WHERE name LIKE '%shoe%', MySQL scans the text for that specific string of characters. It is binary: it is either a match, or it isn’t.

But AI doesn’t think in keywords; it thinks in concepts.

If a user searches for “footwear for running,” a standard MySQL query looking for “shoe” will return zero results. The database doesn’t know that “footwear” and “shoe” are semantically related. To make MySQL understand this, you would have to write massive lists of synonyms or complex fuzzy-search logic that becomes slow and unmanageable at scale.

Why Traditional SQL Struggles with AI Data

- No Semantic Understanding: It cannot natively calculate that “King” – “Man” + “Woman” = “Queen.”

- Performance Bottlenecks: Calculating the “similarity” between millions of data points requires complex math (cosine similarity) that standard SQL engines are not optimized to perform in real-time.

- Rigid Schemas: AI data is often unstructured (text chunks, images, audio), which fights against the strict row-and-column structure of relational tables.

Note: While newer iterations like MySQL HeatWave are adding vector capabilities, the core architecture of relational databases is still optimized for transactions (ACID compliance), not the high-dimensional mathematical proximity searches required by AI.

The Solution: Vector Databases

If MySQL is a filing cabinet, a Vector Database (like Pinecone, Weaviate, or Milvus) is a 3D map of meaning.

Instead of storing text as just text, these databases store Embeddings. An embedding is a long list of numbers (a vector) that represents the meaning of a piece of data. When you store data this way, “Dog” and “Puppy” land very close to each other on the map, while “Table” lands far away.

Why Vector DBs Win for AI

- Semantic Search: You can search for “something to wear on my feet” and the database will return “Sneakers,” “Boots,” and “Sandals” because they are mathematically close in the vector space.

- Speed at Scale: They use algorithms like HNSW (Hierarchical Navigable Small World) to navigate millions of vectors in milliseconds, something that would bring a standard

SELECTquery to a grinding halt. - Multimodal: They can store vectors for text, images, and audio in the same space, allowing you to search for an image using text.

The Architecture: Retrieval-Augmented Generation (RAG)

So, you have a Vector Database. How do you use it? This is where RAG comes in.

One of the biggest risks with Large Language Models (LLMs) like GPT-4 is hallucination. If you ask an LLM about your company’s private data, it will either say “I don’t know” or make something up.

RAG (Retrieval-Augmented Generation) is the architecture that solves this. It bridges your private data and the public AI.

How RAG Works:

- The User asks a question: “What is our refund policy for socks?”

- The Retrieval (Vector DB): The system converts that question into a vector, searches your Vector Database for the most relevant documents (e.g., your PDF policy docs), and retrieves the specific paragraph about “refunds.”

- The Augmentation: The system pastes that paragraph into the prompt sent to the AI.

- The Generation: The AI reads the retrieved paragraph and answers the user accurately: “According to your policy, socks can be refunded within 30 days if unworn.”

Why this beats MySQL

In a standard MySQL setup, you would have to hope the user used the exact keywords appearing in your policy document. With RAG + Vector DBs, the system understands the intent of the question and retrieves the right answer, even if the wording is completely different.

MySQL is fantastic for structured, transactional data—don’t throw it away. But for AI applications, it is simply the wrong tool for the job.

By moving to a Vector Database and a RAG architecture, you move from keyword matching to true understanding. You enable your application to see relationships between data points that strict rows and columns simply cannot capture.

Here is the comparison table outlining the key technical and functional differences between a traditional relational database (MySQL) and a specialized vector database (Pinecone).

MySQL vs. Pinecone: Feature Comparison

| Feature | MySQL (Relational DB) | Pinecone (Vector DB) |

| Primary Data Unit | Rows & Columns. Data is structured in predefined tables with strict schemas. | Vectors (Embeddings). Data is stored as high-dimensional arrays of numbers representing meaning. |

| Search Mechanism | Keyword / Exact Match. Uses B-Tree indexes to find exact strings or ranges (e.g., WHERE name = 'Shoe'). | Semantic / Similarity Match. Uses Approximate Nearest Neighbor (ANN) to find “closest” meanings (e.g., “Footwear” $\approx$ “Shoe”). |

| Indexing Algorithm | B-Tree / Hash. Optimized for fast retrieval of specific records. | HNSW (Hierarchical Navigable Small World). Optimized for navigating complex, high-dimensional spaces quickly. |

| Query Language | SQL (Structured Query Language). A standardized, declarative language. | API / SDKs (Python, Node.js, etc.). Queries are typically JSON objects sent via REST or gRPC. |

| Unstructured Data | Poor. Must be stored as BLOBs or long text fields; difficult to query effectively without full-text search extensions. | Native. Designed specifically to handle embeddings generated from unstructured text, images, and audio. |

| Performance Context | Transactional (OLTP). Best for 100% accuracy, strict consistency (ACID), and banking/inventory systems. | AI Retrieval. Best for ranking, recommendation, and finding context for LLMs where “closest match” is better than no match. |

| Scalability | Vertical Scaling. Often requires bigger servers to handle more load; sharding is complex. | Horizontal Scaling. Cloud-native architecture designed to distribute vector indexes across many nodes easily. |

Key Takeaway

- Use MySQL when you need to know exactly how many socks you sold yesterday.

- Use Pinecone when you need to find which products a customer might like based on their previous vague descriptions.